import numpy as np
import torch
import torch.nn as nn
import torch.nn.functional as F

def get_rays(H, W, K, c2w):
    i, j = torch.meshgrid(torch.linspace(0, W-1, W), torch.linspace(0, H-1, H))  # pytorch's meshgrid has indexing='ij'
    i = i.t()
    j = j.t()
    dirs = torch.stack([(i-K[0][2])/K[0][0], -(j-K[1][2])/K[1][1], -torch.ones_like(i)], -1)  # normalized camera-frame coordinates
    # Rotate ray directions from camera frame to the world frame
    rays_d = torch.sum(dirs[..., np.newaxis, :] * c2w[:3,:3], -1)  # dot product, equivalent to: [c2w.dot(dir) for dir in dirs]
    # Translate camera frame's origin to the world frame. It is the origin of all rays.
    rays_o = c2w[:3,-1].expand(rays_d.shape)
    return rays_o, rays_d

rays_d = ray directions rotated from camera coordinates to world coordinates [400,400,3]

rays_o = camera origin translated to world coordinates, shared by all rays [400,400,3]
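To make the per-pixel arithmetic in get_rays concrete, here is a minimal pure-Python sketch for a single pixel. The helper name `pixel_ray` and the tiny 4x4 camera are hypothetical and chosen only for illustration; the formula itself follows the `dirs`, `rays_d` and `rays_o` lines above.

```python
def pixel_ray(i, j, K, c2w):
    """Return (origin, direction) in world coordinates for pixel column i, row j."""
    fx, fy = K[0][0], K[1][1]
    cx, cy = K[0][2], K[1][2]
    # Camera-frame direction: x points right, y points up, the camera looks down -z.
    d_cam = [(i - cx) / fx, -(j - cy) / fy, -1.0]
    # Rotate into the world frame with the 3x3 rotation block of c2w.
    d_world = [sum(c2w[r][c] * d_cam[c] for c in range(3)) for r in range(3)]
    # Every ray starts at the camera centre, which is the last column of c2w.
    origin = [c2w[r][3] for r in range(3)]
    return origin, d_world

# Hypothetical camera: focal length 2, principal point at (2, 2).
K = [[2.0, 0.0, 2.0], [0.0, 2.0, 2.0], [0.0, 0.0, 1.0]]
# Identity rotation, camera centre at (1, 2, 3).
c2w = [[1.0, 0.0, 0.0, 1.0], [0.0, 1.0, 0.0, 2.0], [0.0, 0.0, 1.0, 3.0]]

center_o, center_d = pixel_ray(2, 2, K, c2w)  # pixel at the principal point
corner_o, corner_d = pixel_ray(0, 0, K, c2w)  # top-left pixel
print(center_o, center_d)
print(corner_o, corner_d)
```

The pixel at the principal point looks straight down -z, while the top-left pixel is tilted toward -x and +y. Both rays share the same origin, which is why get_rays can simply expand the translation column to the shape of rays_d.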

def render(H, W, K, chunk=1024*32, rays=None, c2w=None, ndc=True,
           near=0., far=1.,
           use_viewdirs=False, c2w_staticcam=None,
           **kwargs):
    """Render rays
    Args:
      H: int. Height of image in pixels.
      W: int. Width of image in pixels.
      K: array of shape [3, 3]. Intrinsics matrix of the pinhole camera
        (focal lengths at K[0][0] and K[1][1], principal point at K[0][2], K[1][2]).
      chunk: int. Maximum number of rays to process simultaneously. Used to
        control maximum memory usage. Does not affect final results.
      rays: array of shape [2, batch_size, 3]. Ray origin and direction for
        each example in batch.
      c2w: array of shape [3, 4]. Camera-to-world transformation matrix.
      ndc: bool. If True, represent ray origin, direction in NDC coordinates.
      near: float or array of shape [batch_size]. Nearest distance for a ray.
      far: float or array of shape [batch_size]. Farthest distance for a ray.
      use_viewdirs: bool. If True, use viewing direction of a point in space in model.
      c2w_staticcam: array of shape [3, 4]. If not None, use this transformation matrix for
        camera while using other c2w argument for viewing directions.
    Returns:
      rgb_map: [batch_size, 3]. Predicted RGB values for rays.
      disp_map: [batch_size]. Disparity map. Inverse of depth.
      acc_map: [batch_size]. Accumulated opacity (alpha) along a ray.
      extras: dict with everything returned by render_rays().
    """

    if c2w is not None:
        # special case to render full image
        rays_o, rays_d = get_rays(H, W, K, c2w)  # [H,W,3]
    else:
        # use provided ray batch
        rays_o, rays_d = rays

    if use_viewdirs:
        # provide ray directions as input
        viewdirs = rays_d
        if c2w_staticcam is not None:
            # special case to visualize effect of viewdirs
            rays_o, rays_d = get_rays(H, W, K, c2w_staticcam)
        viewdirs = viewdirs / torch.norm(viewdirs, dim=-1, keepdim=True)
        viewdirs = torch.reshape(viewdirs, [-1,3]).float()

    sh = rays_d.shape  # [..., 3]
    if ndc:
        # for forward facing scenes
        rays_o, rays_d = ndc_rays(H, W, K[0][0], 1., rays_o, rays_d)

    # Create ray batch
    rays_o = torch.reshape(rays_o, [-1,3]).float()  # [N_rays,3]
    rays_d = torch.reshape(rays_d, [-1,3]).float()  # [N_rays,3]

    near, far = near * torch.ones_like(rays_d[...,:1]), far * torch.ones_like(rays_d[...,:1])
    rays = torch.cat([rays_o, rays_d, near, far], -1)  # [1024, 8] = [1024, rays_o(3) + rays_d(3) + near(1) + far(1)]
    if use_viewdirs:
        rays = torch.cat([rays, viewdirs], -1)  # [1024, 11] = [1024, rays(8) + viewdirs(3)]

    # Render and reshape

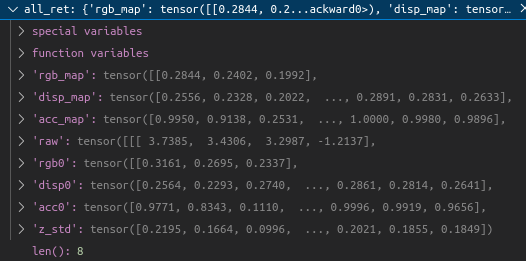

    all_ret = batchify_rays(rays, chunk, **kwargs)
    for k in all_ret:
        # restore the leading dims of the input rays, keep each output's per-ray dims
        k_sh = list(sh[:-1]) + list(all_ret[k].shape[1:])
        all_ret[k] = torch.reshape(all_ret[k], k_sh)

    k_extract = ['rgb_map', 'disp_map', 'acc_map']
    ret_list = [all_ret[k] for k in k_extract]
    ret_dict = {k : all_ret[k] for k in all_ret if k not in k_extract}
    return ret_list + [ret_dict]

near, far = [1024, 1]

rays = see the inline comments in the code above

all_ret = {'rgb_map': [1024,3], 'disp_map': [1024], 'acc_map' : [1024], 'raw': [1024, 192, 4], 'z_std': [1024]}
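The shape bookkeeping in render can be checked without running the network. The sketch below works on plain shape tuples; `ray_width` and `restore_shapes` are hypothetical helper names, and the example shapes are taken from the annotations above (a 400x400 image, a batch of 1024 rays, 192 samples per ray).

```python
def ray_width(use_viewdirs):
    """Number of columns in the packed ray batch."""
    width = 3 + 3 + 1 + 1          # rays_o + rays_d + near + far
    if use_viewdirs:
        width += 3                 # normalized viewing direction
    return width

def restore_shapes(sh, flat_shapes):
    """Apply the same rule as the reshape loop: leading dims of rays_d + per-ray dims."""
    return {k: list(sh[:-1]) + list(shape[1:]) for k, shape in flat_shapes.items()}

# Full-image case: rays_d has shape [400, 400, 3], so the batch holds 160000 rays.
n_rays = 400 * 400
full = restore_shapes((400, 400, 3), {
    'rgb_map': (n_rays, 3),
    'disp_map': (n_rays,),
    'raw': (n_rays, 192, 4),
})

# Training-batch case: rays_d is already flat, so nothing changes.
batch = restore_shapes((1024, 3), {'rgb_map': (1024, 3), 'disp_map': (1024,)})

print(ray_width(False), ray_width(True))
print(full)
print(batch)
```

In the full-image case the flat outputs are folded back into image-shaped arrays, which is why `sh` is recorded before rays_o and rays_d are flattened.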

def run_network(inputs, viewdirs, fn, embed_fn, embeddirs_fn, netchunk=1024*64):
    """Prepares inputs and applies network 'fn'.
    """
    inputs_flat = torch.reshape(inputs, [-1, inputs.shape[-1]])  # flat => torch.Size([65536, 3])
    embedded = embed_fn(inputs_flat)  # embedded => torch.Size([65536, 63])

    if viewdirs is not None:
        input_dirs = viewdirs[:,None].expand(inputs.shape)  # viewdirs => [1024,3], input_dirs => [1024,64,3]
        input_dirs_flat = torch.reshape(input_dirs, [-1, input_dirs.shape[-1]])  # flat => torch.Size([65536, 3])
        embedded_dirs = embeddirs_fn(input_dirs_flat)  # [65536,27]
        embedded = torch.cat([embedded, embedded_dirs], -1)  # embedded => torch.Size([65536, 90])

    outputs_flat = batchify(fn, netchunk)(embedded)  # outputs_flat => torch.Size([65536, 4])
    outputs = torch.reshape(outputs_flat, list(inputs.shape[:-1]) + [outputs_flat.shape[-1]])
    return outputs  # [1024, 64, 4]

embedded = encoded sample points (pts)

embedded_dirs = encoded ray directions
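The widths 63, 27 and 90 in the comments above follow from a sin/cos positional encoding that also keeps the raw input. The sketch below is an illustration, not the repository's embed_fn: it assumes 10 frequencies for points and 4 for directions (values consistent with the widths quoted above), power-of-two frequency bands, and a hypothetical function name `positional_encoding`.

```python
import math

def positional_encoding(x, num_freqs, include_input=True):
    """Encode a 3-vector as [x, sin(2^0 x), cos(2^0 x), ..., sin(2^(L-1) x), cos(2^(L-1) x)]."""
    out = list(x) if include_input else []
    for k in range(num_freqs):
        freq = 2.0 ** k
        for fn in (math.sin, math.cos):
            out.extend(fn(freq * v) for v in x)
    return out

pts_embedded = positional_encoding([0.1, -0.2, 0.3], num_freqs=10)   # one sample point
dirs_embedded = positional_encoding([0.0, 0.0, -1.0], num_freqs=4)   # one viewing direction

# 3 + 3*2*10 = 63, 3 + 3*2*4 = 27, and the concatenation fed to the network is 90 wide.
print(len(pts_embedded), len(dirs_embedded), len(pts_embedded + dirs_embedded))  # 63 27 90
```

Each input coordinate contributes one raw value plus a sine and a cosine per frequency, so the width is 3 * (1 + 2 * num_freqs).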

class NeRF(nn.Module):

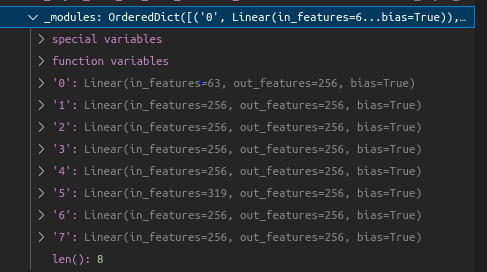

    def __init__(self, D=8, W=256, input_ch=3, input_ch_views=3, output_ch=4, skips=[4], use_viewdirs=False):
        """
        """
        super(NeRF, self).__init__()
        self.D = D  # 8
        self.W = W  # 256
        self.input_ch = input_ch  # 63
        self.input_ch_views = input_ch_views  # 27
        self.skips = skips
        self.use_viewdirs = use_viewdirs

        self.pts_linears = nn.ModuleList(
            [nn.Linear(input_ch, W)] + [nn.Linear(W, W) if i not in self.skips else nn.Linear(W + input_ch, W) for i in range(D-1)])

        ### Implementation according to the official code release (https://github.com/bmild/nerf/blob/master/run_nerf_helpers.py#L104-L105)
        self.views_linears = nn.ModuleList([nn.Linear(input_ch_views + W, W//2)])
        # ModuleList( (0): Linear(in_features=283, out_features=128, bias=True))

        ### Implementation according to the paper
        # self.views_linears = nn.ModuleList(
        #     [nn.Linear(input_ch_views + W, W//2)] + [nn.Linear(W//2, W//2) for i in range(D//2)])

        if use_viewdirs:
            self.feature_linear = nn.Linear(W, W)
            self.alpha_linear = nn.Linear(W, 1)
            self.rgb_linear = nn.Linear(W//2, 3)
        else:
            self.output_linear = nn.Linear(W, output_ch)

    def forward(self, x):
        input_pts, input_views = torch.split(x, [self.input_ch, self.input_ch_views], dim=-1)
        # x => torch.Size([65536, 90]), input_views => torch.Size([65536, 27]), input_pts => torch.Size([65536, 63])
        h = input_pts
        for i, l in enumerate(self.pts_linears):
            h = self.pts_linears[i](h)
            h = F.relu(h)
            if i in self.skips:
                h = torch.cat([input_pts, h], -1)

        if self.use_viewdirs:
            alpha = self.alpha_linear(h)  # density
            feature = self.feature_linear(h)
            h = torch.cat([feature, input_views], -1)  # 256+27

            for i, l in enumerate(self.views_linears):
                h = self.views_linears[i](h)
                h = F.relu(h)

            rgb = self.rgb_linear(h)
            outputs = torch.cat([rgb, alpha], -1)
        else:
            outputs = self.output_linear(h)

        return outputs
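The skip connection is easiest to follow by listing the (in_features, out_features) pair of every layer. The sketch below mirrors the list comprehension in `__init__` using plain tuples instead of nn.Linear; `nerf_layer_shapes` is a hypothetical helper, and the defaults assume the encoded widths 63 and 27 from the comments above.

```python
def nerf_layer_shapes(D=8, W=256, input_ch=63, input_ch_views=27, skips=(4,)):
    """Return the (in_features, out_features) of each linear layer in the view-dependent model."""
    pts = [(input_ch, W)]
    for i in range(D - 1):
        # The layer that follows a skip index receives the hidden state plus the re-injected input.
        in_features = W + input_ch if i in skips else W
        pts.append((in_features, W))
    return {
        'pts_linears': pts,
        'feature_linear': (W, W),
        'alpha_linear': (W, 1),
        'views_linears': [(input_ch_views + W, W // 2)],
        'rgb_linear': (W // 2, 3),
    }

shapes = nerf_layer_shapes()
for idx, shape in enumerate(shapes['pts_linears']):
    print(idx, shape)
print(shapes['views_linears'], shapes['rgb_linear'])
```

Layer 5 is the only one with 319 = 256 + 63 inputs. That matches forward, where input_pts is concatenated onto h right after layer 4, so the next layer must accept the wider tensor. The view branch takes 283 = 256 + 27 inputs, as in the ModuleList comment.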